Security is a key concern in database administration. In a MongoDB sharded cluster, internal authentication secures the communication between the components of the cluster. In this tutorial I will deploy a sharded cluster and enable internal authentication using a keyfile.

Enforcing internal authentication also enforces user access control. To connect to the replica set, clients like the mongo shell need to use a user account.

Step 1: Create a User For Administration

```bash
[root@mongodbserv ~]# adduser mongodb
[root@mongodbserv ~]# passwd mongodb
```

Important Note: Create the mongodb user on all servers and perform all operations as this user.

Step 2: Install MongoDB Community Edition

Using .rpm Packages (Recommended)

Configure the package management system (yum).

Create a /etc/yum.repos.d/mongodb-org-4.0.repo file so that you can install MongoDB directly using yum:

```bash
vi /etc/yum.repos.d/mongodb-org-4.0.repo
```

```ini
[mongodb-org-4.0]
name=MongoDB Repository
baseurl=https://repo.mongodb.org/yum/redhat/$releasever/mongodb-org/4.0/x86_64/
gpgcheck=1
enabled=1
gpgkey=https://www.mongodb.org/static/pgp/server-4.0.asc
```

Note: If you want to install a different version, change 4.0 in the content above accordingly.
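If you expect to switch versions, the repo file can be generated from a version argument instead of being edited by hand. This is only a minimal sketch: the function name write_mongodb_repo and its arguments are hypothetical, and it assumes the repository URLs follow the same pattern for the version you pick.

```shell
# Hypothetical helper: write a MongoDB yum repo file for a given version.
# Usage: write_mongodb_repo <version> <output-file>
write_mongodb_repo() {
  version="$1"
  outfile="$2"
  # \$releasever is escaped so that yum expands it later, not the shell
  cat > "$outfile" <<EOF
[mongodb-org-${version}]
name=MongoDB Repository
baseurl=https://repo.mongodb.org/yum/redhat/\$releasever/mongodb-org/${version}/x86_64/
gpgcheck=1
enabled=1
gpgkey=https://www.mongodb.org/static/pgp/server-${version}.asc
EOF
}

# Example (run as root):
# write_mongodb_repo 4.0 /etc/yum.repos.d/mongodb-org-4.0.repo
```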

Install MongoDB packages

To install the latest stable version of MongoDB, issue the following command:

```bash
sudo yum install -y mongodb-org
```

Step 3: Disable SELINUX

If you are using SELinux, you must configure SELinux to allow MongoDB to start on Red Hat Linux-based systems (Red Hat Enterprise Linux or CentOS Linux).

Disable SELinux by setting the SELINUX setting to disabled in /etc/selinux/config.

```ini
SELINUX=disabled
```
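The same edit can be scripted. The sketch below assumes GNU sed (for the -i option), and the helper name disable_selinux_in is hypothetical. A reboot is still needed before the change in /etc/selinux/config takes effect.

```shell
# Hypothetical helper: set SELINUX=disabled in the given config file.
disable_selinux_in() {
  conf="$1"
  # Replace whatever mode is configured (enforcing/permissive) with disabled.
  # SELINUXTYPE=... is left alone because it does not match "^SELINUX=".
  sed -i 's/^SELINUX=.*/SELINUX=disabled/' "$conf"
}

# Example (run as root):
# disable_selinux_in /etc/selinux/config
```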

Step 4: Stop Firewalld and Disable iptables

Disable and Stop Firewalld

Stop the firewall so the servers can communicate with each other.

```bash
systemctl disable firewalld
systemctl mask --now firewalld
```

Disable iptables

```bash
sudo /sbin/iptables -F && /sbin/iptables-save
```

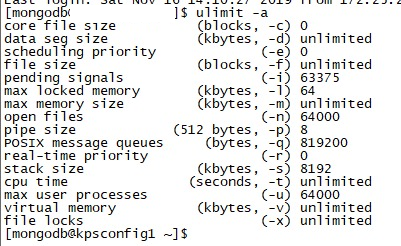

Step 5: Recommended ulimit Settings

Configure the following ulimit parameters for MongoDB.

Edit limits.conf:

```bash
vi /etc/security/limits.conf
```

```text
* soft nproc 64000
* hard nproc 64000
* soft nofile 64000
* hard nofile 64000
```

Edit 20-nproc.conf and update the value as below:

```bash
vi /etc/security/limits.d/20-nproc.conf
```

```text
* soft nproc 64000
```

The recommended ulimit values are:

- -f (file size): unlimited

- -t (cpu time): unlimited

- -v (virtual memory): unlimited

- -l (locked-in-memory size): unlimited

- -n (open files): 64000

- -m (memory size): unlimited

- -u (processes/threads): 64000

Note: You must log out and log back in, or restart the server, for the changes to take effect.

Make sure that your ulimit settings are configured correctly. You can check the current ulimit settings with the command below:

```bash
ulimit -a
```
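Rather than reading the full ulimit -a output, the two values set above can be compared against 64000 directly. This is a minimal sketch with a hypothetical helper name (check_limit). The example calls are commented out because ulimit -u is a bash option and may not exist in other shells.

```shell
# Hypothetical helper: compare a current limit against a required minimum.
# A value of "unlimited" always passes.
check_limit() {
  name="$1"; current="$2"; wanted="$3"
  if [ "$current" = "unlimited" ] || [ "$current" -ge "$wanted" ]; then
    echo "$name: ok ($current)"
  else
    echo "$name: too low ($current < $wanted)"
  fi
}

# Example, in bash, as the mongodb user:
# check_limit "open files" "$(ulimit -n)" 64000
# check_limit "processes" "$(ulimit -u)" 64000
```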

Step 6: Disable Transparent Huge Pages

Before starting the mongod processes, it is recommended to disable Transparent Huge Pages (THP). MongoDB often performs poorly with THP enabled. You can follow this document:

https://docs.mongodb.com/manual/tutorial/transparent-huge-pages/
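To see whether THP is currently active, you can read the kernel setting in /sys/kernel/mm/transparent_hugepage/enabled, where the active mode is shown in square brackets. The helper below is a hypothetical sketch that only extracts that mode. It does not change the setting.

```shell
# Hypothetical helper: extract the active mode from a THP setting string
# such as "always madvise [never]" (the active mode is in brackets).
thp_active_mode() {
  printf '%s\n' "$1" | sed 's/.*\[\(.*\)\].*/\1/'
}

# Example:
# thp_active_mode "$(cat /sys/kernel/mm/transparent_hugepage/enabled)"
```

If the result is anything other than never, THP is still enabled on that host.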

Step 7: Create Directories

Create a folder structure to store the database files. The path structure will be as follows. You may want to change it; it's your choice.

Note: Create the directories as the mongodb user.

hostname1, hostname2 and hostname3 all use the same layout:

```text
/mongodb
    /shA
        /data
        /configfile
    /shB
        /data
        /configfile
    /shC
        /data
        /configfile
    /cfg
        /data
        /configfile
    /logs
    /mongos
        /configfile
```
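The layout above can be created in one go with mkdir -p. This is a minimal sketch. The function name create_mongodb_dirs is hypothetical, and the base directory is passed as an argument so that you can try it somewhere other than /mongodb first.

```shell
# Hypothetical helper: create the tutorial's directory layout under a base directory.
create_mongodb_dirs() {
  base="$1"
  # Each shard and the config server get a data and a configfile directory
  for component in shA shB shC cfg; do
    mkdir -p "$base/$component/data" "$base/$component/configfile"
  done
  # Shared log directory, and the mongos config directory (mongos stores no data)
  mkdir -p "$base/logs" "$base/mongos/configfile"
}

# Example (as the mongodb user, on each host):
# create_mongodb_dirs /mongodb
```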

Step 8: Add IP Addresses and Hostnames to /etc/hosts

We need to add the hostname information to /etc/hosts on all nodes so that the servers can reach each other by hostname.

```bash
vi /etc/hosts
```

```text
10.0.0.14 hostname1.domain hostname1
10.0.0.15 hostname2.domain hostname2
10.0.0.16 hostname3.domain hostname3
```
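A typo in /etc/hosts only shows up later, when a replica set member cannot be reached. So it can help to check that every name used in this tutorial has an entry. The sketch below uses a hypothetical helper, missing_hosts, that prints each name it cannot find in a hosts file.

```shell
# Hypothetical helper: print every hostname that has no entry in a hosts file.
# Usage: missing_hosts <hosts-file> <hostname>...
missing_hosts() {
  hosts_file="$1"
  shift
  for name in "$@"; do
    # Skip comment lines; match the name as a whole field after the IP address
    if ! awk -v n="$name" '
        /^[ \t]*#/ { next }
        { for (i = 2; i <= NF; i++) if ($i == n) found = 1 }
        END { exit !found }' "$hosts_file"; then
      echo "$name"
    fi
  done
}

# Example:
# missing_hosts /etc/hosts hostname1.domain hostname2.domain hostname3.domain
```

No output means every name was found.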

Step 9: Create a Keyfile

You can generate a keyfile using any method you choose. For example, the following operation uses openssl to generate a complex pseudo-random 1024 character string to use for a keyfile.

It then uses chmod to change file permissions to provide read permissions for the file owner only:

```bash
openssl rand -base64 756 > /mongodb/keyfile
chmod 400 /mongodb/keyfile
```

Copy the keyfile to each server hosting the sharded cluster members. Ensure that the user running the mongod or mongos instances is the owner of the file and can access the keyfile.

Copy the file to other nodes:

```bash
scp /mongodb/keyfile hostname2:/mongodb/keyfile
scp /mongodb/keyfile hostname3:/mongodb/keyfile
```
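After copying, it is worth checking the keyfile on each host, because MongoDB refuses a keyfile that is accessible to group or others and expects a key of 6 to 1024 characters. The following is a sketch under assumptions: check_keyfile is a hypothetical helper, it relies on GNU stat, and it only accepts the 400 and 600 permission modes.

```shell
# Hypothetical helper: sanity-check a keyfile before starting mongod/mongos.
check_keyfile() {
  keyfile="$1"
  [ -r "$keyfile" ] || { echo "not readable: $keyfile"; return 1; }
  # GNU stat prints the octal permissions; only the owner may have access
  perms="$(stat -c '%a' "$keyfile")"
  case "$perms" in
    400|600) ;;
    *) echo "permissions too open: $perms"; return 1 ;;
  esac
  # Count the key characters, ignoring whitespace such as line breaks
  length="$(tr -d '[:space:]' < "$keyfile" | wc -c)"
  if [ "$length" -lt 6 ] || [ "$length" -gt 1024 ]; then
    echo "bad key length: $length"
    return 1
  fi
  echo "keyfile ok"
}

# Example (as the mongodb user, on each host):
# check_keyfile /mongodb/keyfile
```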

Step 10: Configure Config Servers

We will use a configuration file in this deployment, so we will set:

- security.keyFile to the keyfile's path,
- sharding.clusterRole to configsvr.

Sample configuration file for config server:

You can start from the sample configuration file /etc/mongod.conf, or use the one below, which matches our path structure.

Create the config-cfg.conf file on each config server:

```bash
vi /mongodb/cfg/configfile/config-cfg.conf
```

Content of the files:

hostname1:

```yaml
# where to write logging data.
systemLog:
  destination: file
  logAppend: true
  path: "/mongodb/logs/cfg.log"
# Where and how to store data.
storage:
  dbPath: "/mongodb/cfg/data"
  directoryPerDB: true
  journal:
    enabled: true
# how the process runs
processManagement:
  fork: true # fork and run in background
# network interfaces
net:
  port: 27000
  bindIp: hostname1,127.0.0.1
security:
  keyFile: /mongodb/keyfile
  authorization: enabled
#replication:
#  replSetName: myconfigreplicasetname
sharding:
  clusterRole: configsvr
```

hostname2:

```yaml
# where to write logging data.
systemLog:
  destination: file
  logAppend: true
  path: "/mongodb/logs/cfg.log"
# Where and how to store data.
storage:
  dbPath: "/mongodb/cfg/data"
  directoryPerDB: true
  journal:
    enabled: true
# how the process runs
processManagement:
  fork: true # fork and run in background
# network interfaces
net:
  port: 27000
  bindIp: hostname2,127.0.0.1
security:
  keyFile: /mongodb/keyfile
  authorization: enabled
#replication:
#  replSetName: myconfigreplicasetname
sharding:
  clusterRole: configsvr
```

hostname3:

```yaml
# where to write logging data.
systemLog:
  destination: file
  logAppend: true
  path: "/mongodb/logs/cfg.log"
# Where and how to store data.
storage:
  dbPath: "/mongodb/cfg/data"
  directoryPerDB: true
  journal:
    enabled: true
# how the process runs
processManagement:
  fork: true # fork and run in background
# network interfaces
net:
  port: 27000
  bindIp: hostname3,127.0.0.1
security:
  keyFile: /mongodb/keyfile
  authorization: enabled
#replication:
#  replSetName: myconfigreplicasetname
sharding:
  clusterRole: configsvr
```

You can include additional options as required for your configuration.

For instance, our deployment members are run on different hosts so we specify the net.bindIp setting here.
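Since the three config server files differ only in net.bindIp, they can be rendered from one template. The sketch below is one possible way to do that. The function name render_cfg_conf is hypothetical, and it leaves out the commented replication section, which is added later in this step.

```shell
# Hypothetical helper: print the config server configuration for one host.
# Only the bindIp line depends on the host name.
render_cfg_conf() {
  host="$1"
  cat <<EOF
systemLog:
  destination: file
  logAppend: true
  path: "/mongodb/logs/cfg.log"
storage:
  dbPath: "/mongodb/cfg/data"
  directoryPerDB: true
  journal:
    enabled: true
processManagement:
  fork: true
net:
  port: 27000
  bindIp: ${host},127.0.0.1
security:
  keyFile: /mongodb/keyfile
  authorization: enabled
sharding:
  clusterRole: configsvr
EOF
}

# Example, run on hostname2:
# render_cfg_conf hostname2 > /mongodb/cfg/configfile/config-cfg.conf
```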

Start mongod

Start the mongod on each config server.

```bash
mongod --config /mongodb/cfg/configfile/config-cfg.conf --auth
```

The MongoDB instance is now running, but no users exist yet, so we cannot authenticate.

In this state MongoDB provides the "localhost exception": while connected from localhost, we can create the first user, and only that one user.

```text
mongo --port 27000
Implicit session: session { "id" : UUID("xxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxx") }
MongoDB server version: 4.0.4
> use admin
switched to db admin
> db.createUser({user:'user1', pwd:'passwd', roles:[{role:'userAdminAnyDatabase', db:'admin'}]})
Successfully added user: {
    "user" : "user1",
    "roles" : [
        {
            "role" : "userAdminAnyDatabase",
            "db" : "admin"
        }
    ]
}
```

When all the replica members start running, it is time to initiate replica sets.

Grant Roles:

user1 is the user created above. The localhost exception no longer applies once the first user exists, so authenticate as user1 before granting roles.

```javascript
use admin
db.grantRolesToUser( "user1", [ "clusterManager" ] )
db.grantRolesToUser( "user1", [ "dbOwner" ] )
```

If you want to grant the root role so that the user can perform all operations, run the command below:

```javascript
use admin
db.grantRolesToUser( "user1", [ "root" ] )
```

Note: If you use db.updateUser() instead, make sure the command lists every role the user should have, because db.updateUser() replaces the existing roles. That is why I use db.grantRolesToUser().

Connect to one of the members of the config server replica set:

```bash
mongo --port 27000 -u user1 -p --authenticationDatabase admin
```

Shut down all the config replica set members:

```javascript
use admin
db.shutdownServer();
```

Add the lines below to each config replica set member's configuration file.

In our example, write myconfigreplicasetname in place of <yourreplicasetname>, because that is the replica set name we specified in our config file.

```yaml
replication:
  replSetName: <yourreplicasetname>
```

Start the mongod instances again:

```bash
mongod --config /mongodb/cfg/configfile/config-cfg.conf --auth
```

Initiate the config server replica set:

```javascript
rs.initiate(
  {
    _id: "myconfigreplicasetname",
    configsvr: true,
    members: [
      { _id : 0, host : "hostname1.domain:27000" },
      { _id : 1, host : "hostname2.domain:27000" },
      { _id : 2, host : "hostname3.domain:27000" }
    ]
  }
)
```

Step 11: Configure Shards

I will create 3 shards, shA, shB and shC, in my example. Each shard will have one primary and 2 secondary copies, so we need 9 configuration files.

I will create shard shA on hostname1, shB on hostname2 and shC on hostname3, but this does not mean that shA resides only on hostname1. It means that hostname1 will be the primary node of shA, hostname2 the primary node of shB, and hostname3 the primary node of shC. The other nodes of each shard will be secondaries.

If you want, you can create all the shards on hostname1. If you perform all operations from hostname1, the primary of all shards will be hostname1.

Shard Distribution in our Example

| hostname1 | hostname2 | hostname3 |
| --- | --- | --- |
| shA_Primary | shA_Secondary | shA_Secondary |
| shB_Secondary | shB_Primary | shB_Secondary |
| shC_Secondary | shC_Secondary | shC_Primary |
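To make the count of 9 configuration files concrete, the sketch below lists every shard member together with the port and config file path used in this tutorial. The function name list_shard_members is hypothetical. The ports 27001 to 27003 and the paths are the ones used in the shard sections that follow.

```shell
# Hypothetical helper: print one line per shard member as
# "<shard> <host> <port> <config file>".
list_shard_members() {
  port=27001
  for shard in shA shB shC; do
    for host in hostname1 hostname2 hostname3; do
      echo "$shard $host $port /mongodb/$shard/configfile/shard-cfg.conf"
    done
    # Each shard listens on its own port: shA 27001, shB 27002, shC 27003
    port=$((port + 1))
  done
}

list_shard_members
```

Each of the three hosts ends up with three shard config files, one per shard.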

Create Shard shA on hostname1

Create a config file for shard shA:

```bash
vi /mongodb/shA/configfile/shard-cfg.conf
```

Content of the file:

```yaml
# where to write logging data.
systemLog:
  destination: file
  logAppend: true
  path: "/mongodb/logs/shA.log"
# Where and how to store data.
storage:
  dbPath: "/mongodb/shA/data"
  directoryPerDB: true
  journal:
    enabled: true
# how the process runs
processManagement:
  fork: true # fork and run in background
# network interfaces
net:
  port: 27001
  bindIp: hostname1,127.0.0.1
security:
  keyFile: /mongodb/keyfile
  authorization: enabled
#replication:
#  replSetName: shA
sharding:
  clusterRole: shardsvr
```

Note: Copy this file to hostname2 and hostname3 and change the hostname in bindIp.

Start mongod

Start the mongod on each shard server, specifying the --config option and the path to the configuration file.

```bash
mongod --config /mongodb/shA/configfile/shard-cfg.conf --auth
```

The MongoDB instance is now running, but no users exist yet, so we cannot authenticate.

In this state MongoDB provides the "localhost exception": while connected from localhost, we can create the first user, and only that one user.

```text
mongo --port 27001
Implicit session: session { "id" : UUID("xxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxx") }
MongoDB server version: 4.0.4
> use admin
switched to db admin
> db.createUser({user:'user1', pwd:'passwd', roles:[{role:'userAdminAnyDatabase', db:'admin'}]})
Successfully added user: {
    "user" : "user1",
    "roles" : [
        {
            "role" : "userAdminAnyDatabase",
            "db" : "admin"
        }
    ]
}
```

Grant Roles:

user1 is the user created above; authenticate as this user before granting roles.

```javascript
use admin
db.grantRolesToUser( "user1", [ "clusterManager" ] )
db.grantRolesToUser( "user1", [ "dbOwner" ] )
```

If you want to grant the root role so that the user can perform all operations, run the command below:

```javascript
use admin
db.grantRolesToUser( "user1", [ "root" ] )
```

Connect to one of the members of the shard replica set:

```bash
mongo --port 27001 -u user1 -p --authenticationDatabase admin
```

Shut down all the shard replica set members:

```javascript
use admin
db.shutdownServer();
```

Add the lines below to each shard replica set member's configuration file.

In our example, write shA in place of <yourreplicasetname>.

```yaml
replication:
  replSetName: <yourreplicasetname>
```

Start the mongod instances again:

```bash
mongod --config /mongodb/shA/configfile/shard-cfg.conf --auth
```

Initiate shA:

```javascript
rs.initiate(
  {
    _id : "shA",
    members: [
      { _id : 0, host : "hostname1.domain:27001" },
      { _id : 1, host : "hostname2.domain:27001" },
      { _id : 2, host : "hostname3.domain:27001" }
    ]
  }
)
```

Create Shard shB on hostname2

Create a config file for shard shB:

```bash
vi /mongodb/shB/configfile/shard-cfg.conf
```

Content of the file:

```yaml
# where to write logging data.
systemLog:
  destination: file
  logAppend: true
  path: "/mongodb/logs/shB.log"
# Where and how to store data.
storage:
  dbPath: "/mongodb/shB/data"
  directoryPerDB: true
  journal:
    enabled: true
# how the process runs
processManagement:
  fork: true # fork and run in background
# network interfaces
net:
  port: 27002
  bindIp: hostname2,127.0.0.1
security:
  keyFile: /mongodb/keyfile
  authorization: enabled
#replication:
#  replSetName: shB
sharding:
  clusterRole: shardsvr
```

Note: Copy this file to hostname1 and hostname3 and change the hostname in bindIp.

Start mongod

Start the mongod on each shard server, specifying the --config option and the path to the configuration file.

```bash
mongod --config /mongodb/shB/configfile/shard-cfg.conf --auth
```

The MongoDB instance is now running, but no users exist yet, so we cannot authenticate.

In this state MongoDB provides the "localhost exception": while connected from localhost, we can create the first user, and only that one user.

```text
mongo --port 27002
Implicit session: session { "id" : UUID("xxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxx") }
MongoDB server version: 4.0.4
> use admin
switched to db admin
> db.createUser({user:'user1', pwd:'passwd', roles:[{role:'userAdminAnyDatabase', db:'admin'}]})
Successfully added user: {
    "user" : "user1",
    "roles" : [
        {
            "role" : "userAdminAnyDatabase",
            "db" : "admin"
        }
    ]
}
```

Grant Roles:

user1 is the user created above; authenticate as this user before granting roles.

```javascript
use admin
db.grantRolesToUser( "user1", [ "clusterManager" ] )
db.grantRolesToUser( "user1", [ "dbOwner" ] )
```

If you want to grant the root role so that the user can perform all operations, run the command below:

```javascript
use admin
db.grantRolesToUser( "user1", [ "root" ] )
```

Connect to one of the members of the shard replica set:

```bash
mongo --port 27002 -u user1 -p --authenticationDatabase admin
```

Shut down all the shard replica set members:

```javascript
use admin
db.shutdownServer();
```

Add the lines below to each shard replica set member's configuration file.

In our example, write shB in place of <yourreplicasetname>.

```yaml
replication:
  replSetName: <yourreplicasetname>
```

Start the mongod instances again:

```bash
mongod --config /mongodb/shB/configfile/shard-cfg.conf --auth
```

Initiate shB:

```javascript
rs.initiate(
  {
    _id : "shB",
    members: [
      { _id : 0, host : "hostname1.domain:27002" },
      { _id : 1, host : "hostname2.domain:27002" },
      { _id : 2, host : "hostname3.domain:27002" }
    ]
  }
)
```

Create Shard shC on hostname3

Create a config file for shard shC:

```bash
vi /mongodb/shC/configfile/shard-cfg.conf
```

Content of the file:

```yaml
# where to write logging data.
systemLog:
  destination: file
  logAppend: true
  path: "/mongodb/logs/shC.log"
# Where and how to store data.
storage:
  dbPath: "/mongodb/shC/data"
  directoryPerDB: true
  journal:
    enabled: true
# how the process runs
processManagement:
  fork: true # fork and run in background
# network interfaces
net:
  port: 27003
  bindIp: hostname3,127.0.0.1
security:
  keyFile: /mongodb/keyfile
  authorization: enabled
#replication:
#  replSetName: shC
sharding:
  clusterRole: shardsvr
```

Note: Copy this file to hostname1 and hostname2 and change the hostname in bindIp.

Start mongod

Start the mongod on each shard server, specifying the --config option and the path to the configuration file.

```bash
mongod --config /mongodb/shC/configfile/shard-cfg.conf --auth
```

The MongoDB instance is now running, but no users exist yet, so we cannot authenticate.

In this state MongoDB provides the "localhost exception": while connected from localhost, we can create the first user, and only that one user.

```text
mongo --port 27003
Implicit session: session { "id" : UUID("xxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxx") }
MongoDB server version: 4.0.4
> use admin
switched to db admin
> db.createUser({user:'user1', pwd:'passwd', roles:[{role:'userAdminAnyDatabase', db:'admin'}]})
Successfully added user: {
    "user" : "user1",
    "roles" : [
        {
            "role" : "userAdminAnyDatabase",
            "db" : "admin"
        }
    ]
}
```

Grant Roles:

user1 is the user created above; authenticate as this user before granting roles.

```javascript
use admin
db.grantRolesToUser( "user1", [ "clusterManager" ] )
db.grantRolesToUser( "user1", [ "dbOwner" ] )
```

If you want to grant the root role so that the user can perform all operations, run the command below:

```javascript
use admin
db.grantRolesToUser( "user1", [ "root" ] )
```

Connect to one of the members of the shard replica set:

```bash
mongo --port 27003 -u user1 -p --authenticationDatabase admin
```

Shut down all the shard replica set members:

```javascript
use admin
db.shutdownServer();
```

Add the lines below to each shard replica set member's configuration file.

In our example, write shC in place of <yourreplicasetname>.

```yaml
replication:
  replSetName: <yourreplicasetname>
```

Start the mongod instances again:

```bash
mongod --config /mongodb/shC/configfile/shard-cfg.conf --auth
```

Initiate shC:

```javascript
rs.initiate(
  {
    _id : "shC",
    members: [
      { _id : 0, host : "hostname1.domain:27003" },
      { _id : 1, host : "hostname2.domain:27003" },
      { _id : 2, host : "hostname3.domain:27003" }
    ]
  }
)
```

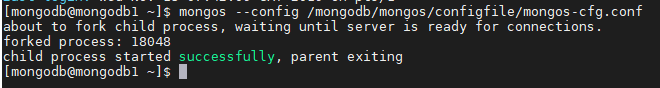

Step 12: Start Mongoses

Now it's time to run the mongos instances. A mongos is not part of a replica set, but you can run several of them. I will create 3 mongos instances in our example.

Create a mongos config file on all mongos servers:

```bash
vi /mongodb/mongos/configfile/mongos-cfg.conf
```

The mongos config file looks like this:

```yaml
# where to write logging data.
systemLog:
  destination: file
  logAppend: true
  path: "/mongodb/logs/mongos.log"

# how the process runs
processManagement:
  fork: true # fork and run in background

# network interfaces
net:
  port: 27017
  bindIp: hostname1,127.0.0.1
  maxIncomingConnections: 5000

security:
  keyFile: /mongodb/keyfile

sharding:
  configDB: "myconfigreplicasetname/hostname1.domain:27000,hostname2.domain:27000,hostname3.domain:27000"
```

Note: Copy this file to the other mongos servers and change the hostname in bindIp.

To run the mongos process:

```bash
mongos --config /mongodb/mongos/configfile/mongos-cfg.conf
```
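The sharding.configDB value has the form replica set name, a slash, then a comma-separated list of host:port pairs. If your hostnames or port differ, a small helper can assemble the string for you. This is a minimal sketch, and the function name replset_conn_string is hypothetical.

```shell
# Hypothetical helper: build "<replSetName>/<host:port>,<host:port>,..."
# Usage: replset_conn_string <replSetName> <port> <host>...
replset_conn_string() {
  name="$1"; port="$2"
  shift 2
  members=""
  for host in "$@"; do
    # Add a comma before every member except the first
    members="${members:+$members,}${host}:${port}"
  done
  echo "${name}/${members}"
}

replset_conn_string myconfigreplicasetname 27000 \
  hostname1.domain hostname2.domain hostname3.domain
```

With the values from this tutorial, the call prints the same string that is used for configDB in the mongos config file.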

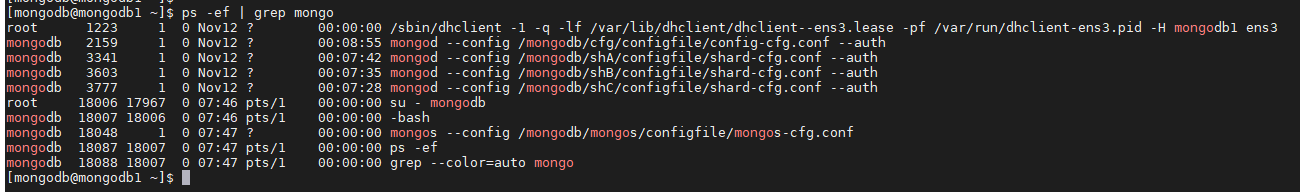

Step 13: Check All Mongos Components Started
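One way to confirm that every component is up is to probe each port used in this tutorial. The sketch below makes several assumptions: the helper names are hypothetical, one mongos is assumed to run on each of the three hosts, and port_open relies on bash's /dev/tcp redirection, so it has to be run with bash.

```shell
# Hypothetical helper: list every endpoint started in this tutorial,
# one per line as "<component> <host> <port>".
expected_endpoints() {
  for host in hostname1 hostname2 hostname3; do
    echo "cfg $host 27000"
    echo "shA $host 27001"
    echo "shB $host 27002"
    echo "shC $host 27003"
    echo "mongos $host 27017"
  done
}

# Succeeds if a TCP connection to host:port can be opened (bash only).
port_open() {
  (exec 3<>"/dev/tcp/$1/$2") 2>/dev/null
}

# Example, in bash:
# expected_endpoints | while read -r component host port; do
#   if port_open "$host" "$port"; then
#     echo "$component on $host:$port is up"
#   else
#     echo "$component on $host:$port is DOWN"
#   fi
# done
```

An open port only shows that a process is listening. The sh.status() check in the next step confirms that the cluster itself is healthy.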

Step 14: Connect to mongos and add shards

```bash
mongo --port 27017 -u user1 -p --authenticationDatabase admin
```

```javascript
sh.addShard( "shA/hostname1.domain:27001,hostname2.domain:27001,hostname3.domain:27001")
sh.addShard( "shB/hostname1.domain:27002,hostname2.domain:27002,hostname3.domain:27002")
sh.addShard( "shC/hostname1.domain:27003,hostname2.domain:27003,hostname3.domain:27003")
```

Note: shA, shB and shC are our replica set names. If your replica set names are different, change the commands above accordingly.
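If your replica set names or ports differ, the sh.addShard() calls can be generated rather than typed. The following is a minimal sketch with a hypothetical function name, print_add_shard_commands. It assumes the tutorial's layout, where every shard has a member on each of the three hosts and the shards use ports 27001 to 27003.

```shell
# Hypothetical helper: print one sh.addShard() call per shard,
# ready to paste into the mongo shell connected to mongos.
print_add_shard_commands() {
  port=27001
  for shard in shA shB shC; do
    echo "sh.addShard(\"$shard/hostname1.domain:$port,hostname2.domain:$port,hostname3.domain:$port\")"
    port=$((port + 1))
  done
}

print_add_shard_commands
```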

Check sharded cluster status:

Connect to mongos and run the command below.

```javascript
sh.status()
```

The sharded replica set configuration is done. Now you can restore a dump and start working on dbs 🙂

You may want to read the articles below:

“Back Up a Sharded Cluster with File System Snapshots”,

“Automatizing backup process on sharded clusters”,

“Restore a Sharded Cluster with Database Dumps in MongoDB”


Database Tutorials MSSQL, Oracle, PostgreSQL, MySQL, MariaDB, DB2, Sybase, Teradata, Big Data, NOSQL, MongoDB, Couchbase, Cassandra, Windows, Linux
