Today I will tell you How To Create A PostgreSQL Cluster With Patroni. I will be using Centos 7 as the operating system.

First of all, we will need 4 virtual servers with Centos 7 installed. You can access Centos 7 installation from the link below.

1 | https://dbtut.com/index.php/2022/02/13/centos-7-installation/ |

1 2 3 4 5 | HOSTNAME IP ADDRESS pg_node1 192.168.1.18 Patroni ve PostgreSQL pg_node2 192.168.1.19 Patroni ve PostgreSQL etcd 192.168.1.20 ETCD Server haproxy 192.168.1.21 HA Proxy |

2. We install the epel repo on all our servers.

1 2 3 | $ sudo yum -y install epel-release $ sudo yum -y install centos-release-scl-rh $ sudo yum -y update |

3. Then we restart our servers.

1 | $ sudo shutdown -r now |

4. Install PostgreSQL 12 on pg_node1 and pg_node2.

1 2 | $ sudo yum -y install https://download.postgresql.org/pub/repos/yum/reporpms/EL-7-x86_64/pgdg-redhat-repo-latest.noarch.rpm $ sudo yum -y install postgresql12-server postgresql12 postgresql12-devel |

5. We install Patroni on pg_node1 and pg_node2.

1 | $ sudo yum -y install https://github.com/cybertec-postgresql/patroni-packaging/releases/download/1.6.5-1/patroni-1.6.5-1.rhel7.x86_64.rpm |

6. We create a config file for Patroni on pg_node1 and pg_node2.

1 | $ sudo cp -p /opt/app/patroni/etc/postgresql.yml.sample /opt/app/patroni/etc/postgresql.yml |

We enter the configuration file with vi and edit it as follows.

1 | $ sudo vi /opt/app/patroni/etc/postgresql.yml |

For pg_node1 :

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 27 28 29 30 31 32 33 34 35 36 37 38 39 40 41 42 43 44 45 46 47 48 49 | scope: postgres namespace: /pg_cluster/ name: pg_node1 restapi: listen: 192.168.1.18:8008 connect_address: 192.168.1.18:8008 etcd: host: 192.168.1.20:2379 bootstrap: dcs: ttl: 30 loop_wait: 10 retry_timeout: 10 maximum_lag_on_failover: 1048576 postgresql: use_pg_rewind: true use_slots: true initdb: - encoding: UTF8 - data-checksums pg_hba: - host replication replicator 127.0.0.1/32 md5 - host replication replicator 192.168.1.18/0 md5 - host replication replicator 192.168.1.19/0 md5 - host all all 0.0.0.0/0 md5 users: admin: password: admin options: - createrole - createdb postgresql: listen: 192.168.1.18:5432 connect_address: 192.168.1.18:5432 data_dir: /var/lib/pgsql/12/data bin_dir: /usr/pgsql-12/bin pgpass: /tmp/pgpass authentication: replication: username: replicator password: replicator superuser: username: postgres password: postgres tags: nofailover: false noloadbalance: false clonefrom: false nosync: false |

For pg_node2:

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 27 28 29 30 31 32 33 34 35 36 37 38 39 40 41 42 43 44 45 46 47 48 49 | scope: postgres namespace: /pg_cluster/ name: pg_node2 restapi: listen: 192.168.1.19:8008 connect_address: 192.168.1.19:8008 etcd: host: 192.168.1.20:2379 bootstrap: dcs: ttl: 30 loop_wait: 10 retry_timeout: 10 maximum_lag_on_failover: 1048576 postgresql: use_pg_rewind: true use_slots: true initdb: - encoding: UTF8 - data-checksums pg_hba: - host replication replicator 127.0.0.1/32 md5 - host replication replicator 192.168.1.18/0 md5 - host replication replicator 192.168.1.19/0 md5 - host all all 0.0.0.0/0 md5 users: admin: password: admin options: - createrole - createdb postgresql: listen: 192.168.1.19:5432 connect_address: 192.168.1.19:5432 data_dir: /var/lib/pgsql/12/data bin_dir: /usr/pgsql-12/bin pgpass: /tmp/pgpass authentication: replication: username: replicator password: replicator superuser: username: postgres password: postgres tags: nofailover: false noloadbalance: false clonefrom: false nosync: false |

7. We install etcd on the etcd server.

1 | $ sudo yum -y install etcd |

8. After the installation is finished, we make the following changes in the configuration file.

1 2 3 4 5 6 7 8 9 10 11 | $ sudo vi /etc/etcd/etcd.conf [Member] ETCD_LISTEN_PEER_URLS="http://192.168.1.20:2380,http://localhost:2380" ETCD_LISTEN_CLIENT_URLS="http://192.168.1.20:2379,http://localhost:2379" [Clustering] ETCD_INITIAL_ADVERTISE_PEER_URLS="http://192.168.1.20:2380" ETCD_ADVERTISE_CLIENT_URLS="http://192.168.1.20:2379" ETCD_INITIAL_CLUSTER="default=http://192.168.1.20:2380" ETCD_INITIAL_CLUSTER_TOKEN="etcd-cluster" ETCD_INITIAL_CLUSTER_STATE="new" |

9. We start the etcd service.

1 2 3 | $ sudo systemctl enable etcd $ sudo systemctl start etcd $ sudo systemctl status etcd |

10. After starting the etcd service, we start the Patroni service in pg_node1 and pg_node2.

1 2 3 | $ sudo systemctl enable patroni $ sudo systemctl start patroni $ sudo systemctl status patroni |

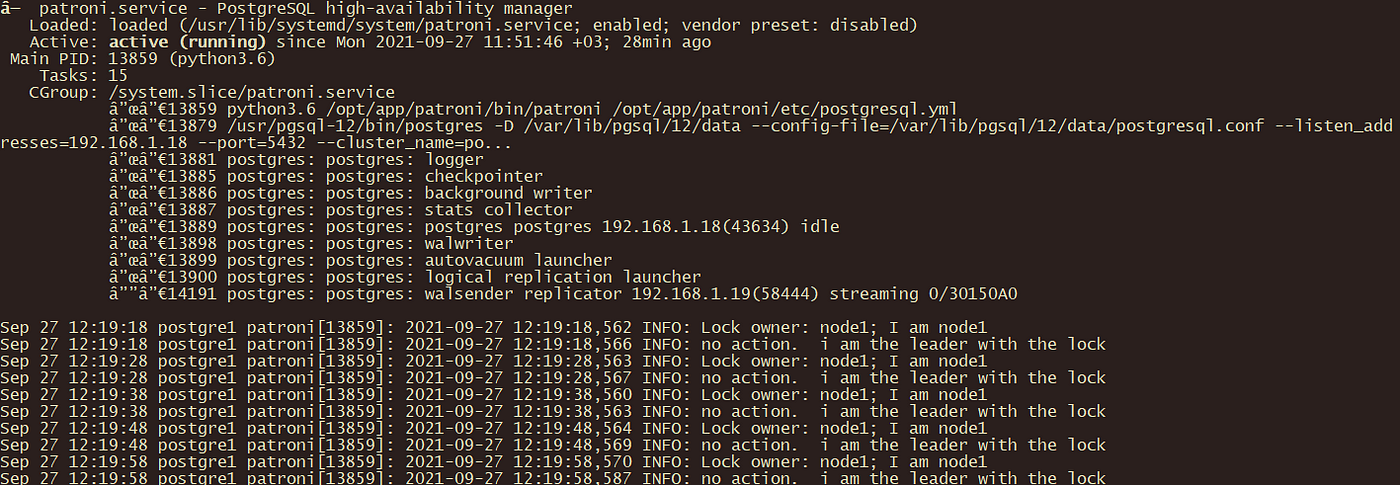

pg_node1 :

11. We install HAProxy on the haproxy server.

1 | $ sudo yum -y install haproxy |

12. After the installation is finished, the configuration file is backed up and arranged as follows.

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 27 28 29 30 31 32 33 34 35 36 37 38 39 40 | $ sudo cp -p /etc/haproxy/haproxy.cfg /etc/haproxy/haproxy.cfg_old $ sudo vi /etc/haproxy/haproxy.cfg global log 127.0.0.1 local2 chroot /var/lib/haproxy pidfile /var/run/haproxy.pid maxconn 4000 user haproxy group haproxy daemon stats socket /var/lib/haproxy/stats defaults mode tcp log global option httplog option dontlognull option http-server-close option forwardfor except 127.0.0.0/8 option redispatch retries 3 timeout http-request 10s timeout queue 1m timeout connect 4s timeout client 30m timeout server 30m timeout http-keep-alive 10s timeout check 5s maxconn 3000 listen stats mode http bind *:7000 stats enable stats uri / listen postgres bind *:5000 option httpchk http-check expect status 200 default-server inter 3s fall 3 rise 2 on-marked-down shutdown-sessions server pg_node1 192.168.1.18:5432 maxconn 1000 check port 8008 server pg_node2 192.168.1.19:5432 maxconn 1000 check port 8008 |

13. We start the HAProxy service.

1 2 3 | $ sudo systemctl start haproxy $ sudo systemctl enable haproxy $ sudo systemctl status haproxy |

If the HAProxy service cannot be started, we can check the errors in the configuration file with the following command.

1 | $ sudo haproxy -c -V -f /etc/haproxy/haproxy.cfg |

When we open the address http://192.168.1.21:7000 in the browser, we will see a haproxy panel like the one below. As can be seen here, pg_node1 is the master and pg_node2 is the slave.

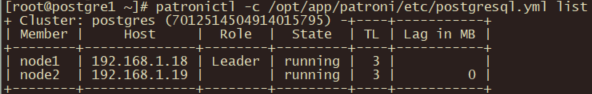

We can also check the cluster status, role and members with the following command.

1 | $ sudo patronictl -c /opt/app/patroni/etc/postgresql.yml list |

We have completed the cluster setup with Patroni. See you in my other articles.

![]()

Database Tutorials MSSQL, Oracle, PostgreSQL, MySQL, MariaDB, DB2, Sybase, Teradata, Big Data, NOSQL, MongoDB, Couchbase, Cassandra, Windows, Linux

Database Tutorials MSSQL, Oracle, PostgreSQL, MySQL, MariaDB, DB2, Sybase, Teradata, Big Data, NOSQL, MongoDB, Couchbase, Cassandra, Windows, Linux

I have referred above documentation with 4 machines having CentOS7, please refer below installed packages/ libraries as mentioned below

Strange part is both partroni nodes are having different status logs. The file coming in logs of node1 “/var/lib/pgsql/15/data/postgresql.conf” is not present in node2, even folder “/var/lib/pgsql/15/data” is not present.

node 1

patroni.x86_64 1.6.5-1.rhel7

postgresql15.x86_64 15.2-1PGDG.rhel7

postgresql15-devel.x86_64 15.2-1PGDG.rhel7

postgresql15-libs.x86_64 15.2-1PGDG.rhel7

postgresql15-server.x86_64 15.2-1PGDG.rhel7

node 2

patroni.x86_64 1.6.5-1.rhel7

postgresql15.x86_64 15.2-1PGDG.rhel7

postgresql15-devel.x86_64 15.2-1PGDG.rhel7

postgresql15-libs.x86_64 15.2-1PGDG.rhel7

postgresql15-server.x86_64 15.2-1PGDG.rhel7

node 3

etcd.x86_64 3.3.11-2.el7.centos

node 4

haproxy.x86_64 1.5.18-9.el7_9.1

—————————————

—————————————

Refer below status from each node:

node 1 status

patroni.service – PostgreSQL high-availability manager

Loaded: loaded (/usr/lib/systemd/system/patroni.service; enabled; vendor preset: disabled)

Active: active (running) since Mon 2023-08-21 17:33:52 IST; 6min ago

Main PID: 236874 (python3.6)

Tasks: 5

Memory: 19.8M

CGroup: /system.slice/patroni.service

└─236874 python3.6 /opt/app/patroni/bin/patroni /opt/app/patroni/etc/postgresql.yml

Aug 21 17:40:43 patroni[236874]: Mock authentication nonce: 46384283d89d7a56320d5d1c488e90a400e61e45ef9ed7d6f081c0574e57a893

Aug 21 17:40:43 patroni[236874]: 2023-08-21 17:40:43,146 INFO: Lock owner: None; I am pg_node1

Aug 21 17:40:43 patroni[236874]: 2023-08-21 17:40:43,156 INFO: Lock owner: None; I am pg_node1

Aug 21 17:40:43 patroni[236874]: 2023-08-21 17:40:43,159 INFO: starting as a secondary

Aug 21 17:40:43 patroni[236874]: 2023-08-21 12:10:43.421 GMT [237946] LOG: unrecognized configuration parameter “wal_keep_segments” in file “/var/lib/pgsql/15/data/postgresql.conf” line 16

Aug 21 17:40:43 patroni[236874]: 2023-08-21 12:10:43.421 GMT [237946] FATAL: configuration file “/var/lib/pgsql/15/data/postgresql.conf” contains errors

Aug 21 17:40:43 patroni[236874]: 2023-08-21 17:40:43,432 INFO: postmaster pid=237946

Aug 21 17:40:43 patroni[236874]: 172.27.55.31:5432 – no response

Aug 21 17:40:43 patroni[236874]: 2023-08-21 17:40:43,444 INFO: Lock owner: None; I am pg_node1

Aug 21 17:40:43 patroni[236874]: 2023-08-21 17:40:43,447 INFO: failed to start postgres

—————————————

—————————————

node 2 status

patroni.service – PostgreSQL high-availability manager

Loaded: loaded (/usr/lib/systemd/system/patroni.service; enabled; vendor preset: disabled)

Active: active (running) since Fri 2023-08-18 12:28:51 IST; 3 days ago

Main PID: 5924 (python3.6)

Tasks: 5

Memory: 21.6M

CGroup: /system.slice/patroni.service

└─5924 python3.6 /opt/app/patroni/bin/patroni /opt/app/patroni/etc/postgresql.yml

Aug 21 17:40:51 patroni[5924]: 2023-08-21 17:40:51,778 INFO: Lock owner: None; I am pg_node2

Aug 21 17:40:51 patroni[5924]: 2023-08-21 17:40:51,780 INFO: waiting for leader to bootstrap

Aug 21 17:41:01 patroni[5924]: 2023-08-21 17:41:01,779 INFO: Lock owner: None; I am pg_node2

Aug 21 17:41:01 patroni[5924]: 2023-08-21 17:41:01,780 INFO: waiting for leader to bootstrap

Aug 21 17:41:11 patroni[5924]: 2023-08-21 17:41:11,779 INFO: Lock owner: None; I am pg_node2

Aug 21 17:41:11 patroni[5924]: 2023-08-21 17:41:11,781 INFO: waiting for leader to bootstrap

Aug 21 17:41:21 patroni[5924]: 2023-08-21 17:41:21,778 INFO: Lock owner: None; I am pg_node2

Aug 21 17:41:21 patroni[5924]: 2023-08-21 17:41:21,780 INFO: waiting for leader to bootstrap

Aug 21 17:41:31 patroni[5924]: 2023-08-21 17:41:31,779 INFO: Lock owner: None; I am pg_node2

Aug 21 17:41:31 patroni[5924]: 2023-08-21 17:41:31,780 INFO: waiting for leader to bootstrap

—————————————

—————————————

node 3 status

etcd.service – Etcd Server

Loaded: loaded (/usr/lib/systemd/system/etcd.service; enabled; vendor preset: disabled)

Active: active (running) since Fri 2023-08-18 11:35:05 IST; 3 days ago

Main PID: 46856 (etcd)

Tasks: 18

Memory: 118.3M

CGroup: /system.slice/etcd.service

└─46856 /usr/bin/etcd –name=default –data-dir= –listen-client-urls=http://:2379,http://localhost:2379

Aug 20 12:40:54 etcd[46856]: saved snapshot at index 400004

Aug 20 12:40:54 etcd[46856]: compacted raft log at 395004

Aug 20 17:27:08 etcd[46856]: segmented wal file default.etcd/member/wal/0000000000000001-000000000006b47b.wal is created

Aug 21 00:47:09 etcd[46856]: start to snapshot (applied: 500005, lastsnap: 400004)

Aug 21 00:47:09 etcd[46856]: saved snapshot at index 500005

Aug 21 00:47:09 etcd[46856]: compacted raft log at 495005

Aug 21 12:53:25 etcd[46856]: start to snapshot (applied: 600006, lastsnap: 500005)

Aug 21 12:53:25 etcd[46856]: saved snapshot at index 600006

Aug 21 12:53:25 etcd[46856]: compacted raft log at 595006

Aug 21 12:53:36 etcd[46856]: purged file default.etcd/member/snap/0000000000000002-00000000000186a1.snap successfully

—————————————

—————————————

node 4 status

haproxy.service – HAProxy Load Balancer

Loaded: loaded (/usr/lib/systemd/system/haproxy.service; enabled; vendor preset: disabled)

Active: active (running) since Thu 2023-08-17 20:16:50 IST; 3 days ago

Main PID: 133274 (haproxy-systemd)

Tasks: 3

Memory: 1.9M

CGroup: /system.slice/haproxy.service

├─133274 /usr/sbin/haproxy-systemd-wrapper -f /etc/haproxy/haproxy.cfg -p /run/haproxy.pid

├─133275 /usr/sbin/haproxy -f /etc/haproxy/haproxy.cfg -p /run/haproxy.pid -Ds

└─133276 /usr/sbin/haproxy -f /etc/haproxy/haproxy.cfg -p /run/haproxy.pid -Ds

Warning: Journal has been rotated since unit was started. Log output is incomplete or unavailable.

—————————————

—————————————