In this article, we will move a CSV file we created to Elasticsearch with Logstash. You can move TXT files to Elasticsearch in the same way.

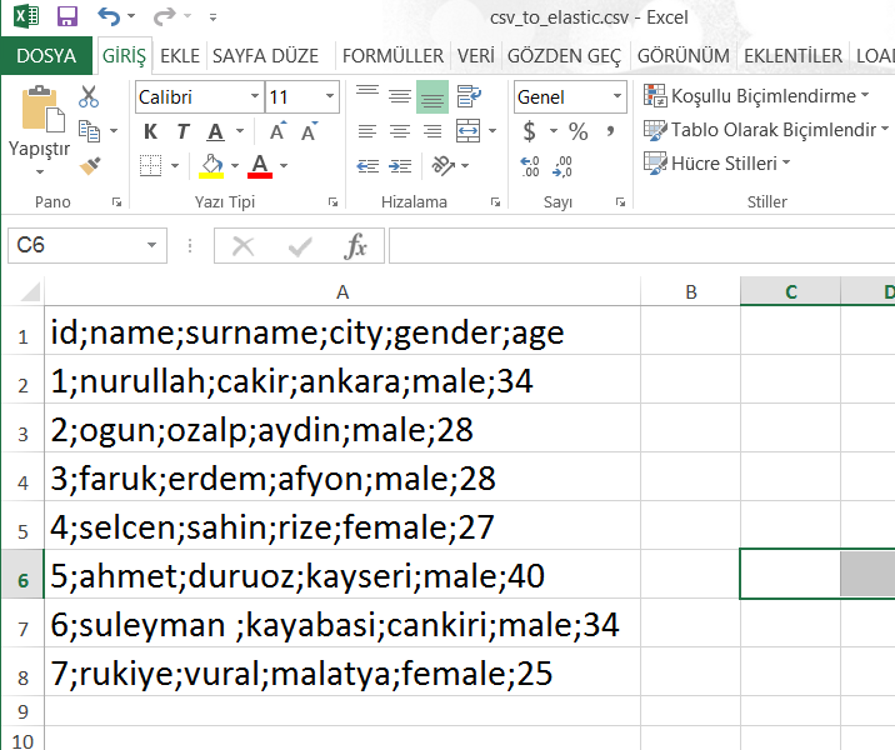

We create the CSV file as follows and copy it to the /home/elastic directory on the server where Logstash is installed. You can use the following articles to install Logstash and Elasticsearch.

“How To Install Elasticsearch On Centos”

“How To Install Logstash On Centos”
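If you want to follow along without your own data, a small file can be created from the shell. This is a sketch under assumptions: the file name csv_test.csv and every row value are hypothetical placeholders. Only the column order (id, name, surname, city, gender, age) and the `;` separator come from the Logstash configuration in this article. The sample has no header row because that configuration names the columns itself and does not skip header lines.

```shell
#!/bin/sh
# Sketch: create a small semicolon-separated sample file.
# Hypothetical file name and row values; the column order matches
# columns => ["id","name","surname","city","gender","age"].
cat > csv_test.csv <<'EOF'
1;John;Smith;London;M;34
2;Maria;Garcia;Madrid;F;28
3;Ahmet;Yilmaz;Ankara;M;41
EOF

# Next step (not run here): copy it to the directory Logstash reads from, e.g.
#   cp csv_test.csv /home/elastic/
```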

After creating the CSV file, we go to the /etc/logstash/conf.d/ directory and create a configuration file with the following contents.

```shell
cd /etc/logstash/conf.d/
vi csv_to_elastic.conf
```

```
input {
  file {
    # the path where the CSV file is located (write your own CSV file name here)
    path => "/home/elastic/write_your_csv_file_name.csv"
    start_position => "beginning"
    sincedb_path => "/dev/null"
  }
}
filter {
  csv {
    # separator in the CSV file
    separator => ";"
    # columns in the CSV file
    columns => ["id","name","surname","city","gender","age"]
  }
}
output {
  elasticsearch {
    hosts => "write_your_elasticsearch_ip"
    index => "csv_test_index" # we write our index name here.
    #document_type => "%{type}"
    # If you want Logstash to move changes in the CSV file to Elasticsearch continuously,
    # you must specify a unique column as below. Otherwise the line below is not necessary.
    document_id => "%{id}"
    user => "write_your_elasticsearch_username"
    password => "write_your_elasticsearch_password"
  }
}
```
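To see roughly what the csv filter does with one line, here is a shell imitation using awk: it splits the line on `;` and pairs each value with the column name at the same position. Values beyond the named columns get the default names column7, column8 and so on, as the csv filter does when too few columns are configured. This is only an illustration, assuming no quoted values that contain `;`. It is not how Logstash is implemented, and the real event also carries fields such as `message` and `@timestamp`. With `document_id => "%{id}"`, the id value printed first would also become the document's `_id`. The function name csv_line_to_fields is hypothetical.

```shell
#!/bin/sh
# Illustration only: imitate the csv filter for a single line by splitting
# on ';' and pairing each value with the configured column name.
csv_line_to_fields() {
    printf '%s\n' "$1" | awk -F';' '
        BEGIN { split("id name surname city gender age", cols, " ") }
        {
            for (i = 1; i <= NF; i++) {
                # use the configured column name, or columnN for extra values
                name = (i in cols) ? cols[i] : "column" i
                printf "%s=%s\n", name, $i
            }
        }'
}

csv_line_to_fields '1;John;Smith;London;M;34'
```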

Then we move the CSV file to Elasticsearch with the command below.

```shell
/usr/share/logstash/bin/logstash -f /etc/logstash/conf.d/csv_to_elastic.conf --path.settings=/etc/logstash/
```

If you want Logstash to move changes in the CSV file to Elasticsearch continuously, run this command in the background with nohup, as below, so that it keeps running after you close your session.

```shell
nohup /usr/share/logstash/bin/logstash -f /etc/logstash/conf.d/csv_to_elastic.conf --path.settings=/etc/logstash/ &
```

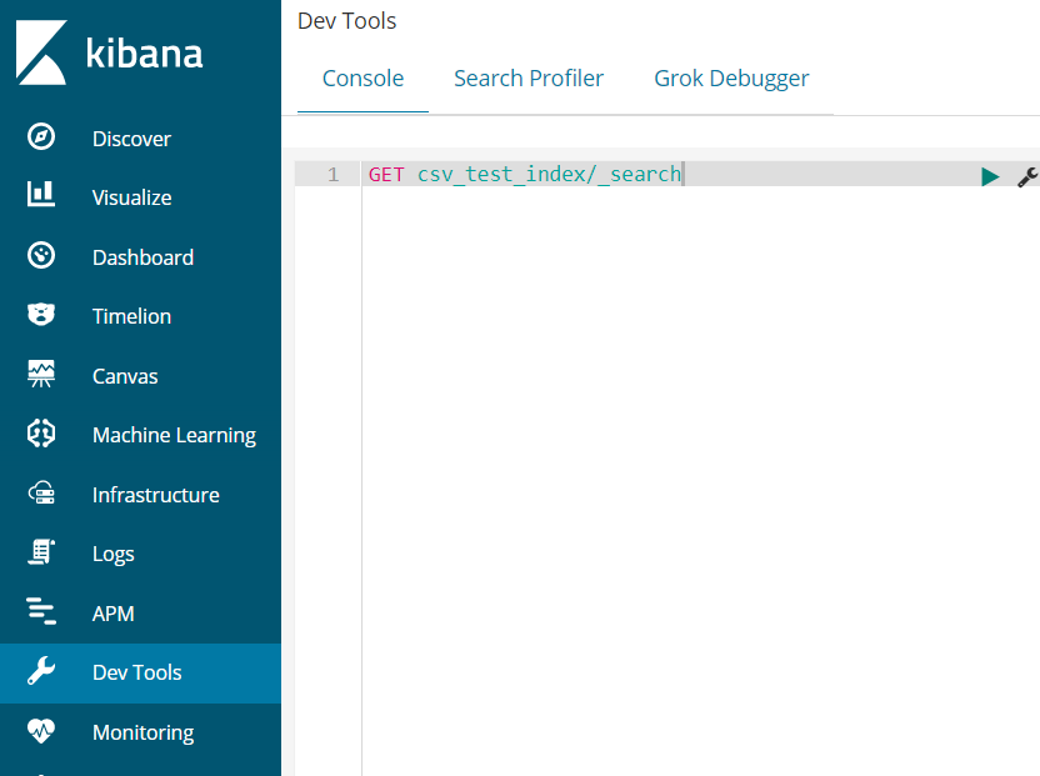

Then we can see the records in Kibana by running a GET request against the index in Dev Tools, for example `GET csv_test_index/_search`.
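As a quick sanity check, one option is to compare the number of distinct id values in the CSV file with the document count Elasticsearch reports, for example via `GET csv_test_index/_count` in Dev Tools. Because `document_id => "%{id}"` is set, rows sharing an id overwrite each other, so distinct ids (not raw lines) is the number to compare. The sketch below counts a header row too, if your file has one, since the configuration above does not skip header lines and, as far as we know, Logstash would index such a row as a document as well. The function name count_distinct_ids is hypothetical, and it assumes no quoted values containing `;`.

```shell
#!/bin/sh
# Sketch: count distinct first-column (id) values in a semicolon-separated
# file, ignoring empty lines. Compare the result with the index's _count.
count_distinct_ids() {
    awk -F';' 'NF > 0 && !($1 in seen) { seen[$1] = 1; n++ } END { print n + 0 }' "$1"
}
```

Usage, with your own file name: `count_distinct_ids /home/elastic/write_your_csv_file_name.csv`.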

You can access other articles from the menu to get more information about ELK.


Database Tutorials MSSQL, Oracle, PostgreSQL, MySQL, MariaDB, DB2, Sybase, Teradata, Big Data, NOSQL, MongoDB, Couchbase, Cassandra, Windows, Linux